I woke up to six perfectly formatted pull requests in my GitHub notifications. Each contained clean code, proper documentation, and detailed explanations of a complete web application that had been built, tested, and deployed while I slept. This isn’t science fiction. This is Jules, Google’s new autonomous coding agent from Google Labs, and it’s already changing how I think about software development.

After years of coding, and more recently working with AI-generated code and agentic workflows, I was eager to test Jules in real production scenarios. What I found were some surprising insights into how Google’s approach to autonomous coding differs from other AI tools I’ve used. The real power of Jules isn’t just in writing code, but in challenging how we think about development workflows, bumps and all.

Google’s Jules represents a significant evolution in agentic coding architectures, moving beyond traditional LLM-assisted development toward true autonomous planning and execution. Built on Gemini 2.5 Pro, it operates within isolated Google Cloud VMs under a “permission-based autonomy model,” in which the agent proposes detailed action plans that require explicit human approval before execution.

🏗️ Agent Architecture and Execution Model

Jules functions as an asynchronous agent rather than a synchronous coding assistant. The system executes within isolated Google Cloud VMs, implementing a plan-approve-execute workflow that mirrors human code review processes, but with machine-level consistency.

This design pattern addresses a core challenge in agentic systems: balancing autonomous capability with human oversight. Unlike tools that provide real-time suggestions or completions, Jules works like a remote contractor who never gets tired and always documents their work properly.
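To make the plan-approve-execute gate concrete, here is a small state machine that models it. This is a hypothetical sketch of the workflow as described above, not Jules’s implementation or API; the `Session` and `State` names and their transitions are assumptions for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    PLANNING = auto()
    AWAITING_APPROVAL = auto()
    EXECUTING = auto()
    DONE = auto()


@dataclass
class Session:
    """Hypothetical model of a plan-approve-execute agent session."""
    task: str
    state: State = State.PLANNING
    plan: list[str] = field(default_factory=list)
    log: list[str] = field(default_factory=list)

    def propose(self, steps: list[str]) -> None:
        # The agent drafts a plan; nothing runs until a human approves it.
        if self.state is not State.PLANNING:
            raise RuntimeError("can only propose a plan while planning")
        self.plan = list(steps)
        self.state = State.AWAITING_APPROVAL

    def review(self, approved: bool) -> None:
        # Human gate: approval unlocks execution, rejection sends it back to planning.
        if self.state is not State.AWAITING_APPROVAL:
            raise RuntimeError("no plan is awaiting approval")
        self.state = State.EXECUTING if approved else State.PLANNING

    def execute(self) -> None:
        # Execution is only reachable through an approved plan.
        if self.state is not State.EXECUTING:
            raise RuntimeError("plan has not been approved")
        for step in self.plan:
            self.log.append(f"done: {step}")
        self.state = State.DONE


if __name__ == "__main__":
    session = Session("Add a single 'Scrape All Resources' button")
    session.propose(["Remove the two old buttons", "Add the combined button"])
    session.review(approved=True)
    session.execute()
    print(session.state, session.log)
```

The point of the design is that `execute` cannot be reached without passing through `review`, which is the property the permission-based model relies on.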

But here’s where I hit my first significant snag. Jules repeatedly asked me, “Let me know when you’re ready to test the extension,” even though testing wasn’t possible directly in the tool. It kept insisting it was “explaining” what to do next, but it never actually explained anything. This wasted about 10 minutes of back-and-forth before I broke the loop by restructuring my request entirely. Note that this is the only issue I’ve had so far; every other step has been seamless.

🧪 Testing Jules’s Autonomous Capabilities

I tested Jules by building a new AI news aggregation tool with this prompt:

Task 1: Update Web Interface to "Namos Newsletter Resources Manager"

Update the page title and heading:

Change the page title to "Namos Newsletter Resources Manager"

Update the main heading to match

Modify the UI:

Remove the two separate buttons for tools and news

Add a single button labeled "Scrape All Resources"

When clicked, it should:

Show a loading state

Run both scrapers (tools and news)

Combine the results into a single table

Update the Results Table:

Combine both tools and news into one table with these columns:

Item Type: "Tool" or "News"

Title/Name: The name of the tool or news title

Category: The category (or "Uncategorized" if none)

Description: Short description of the item

Source: Source website (e.g., "FutureTools.io" or news source)

Link: Clickable link to the item

UI/UX Improvements:

Show a counter of selected items

Disable the export button when no items are selected

Add a success message after export

Implementation Notes:

Keep the existing error handling and loading states

Ensure the export format is clean and easy to copy/paste into email/newsletter platforms

The export should maintain any links and formatting

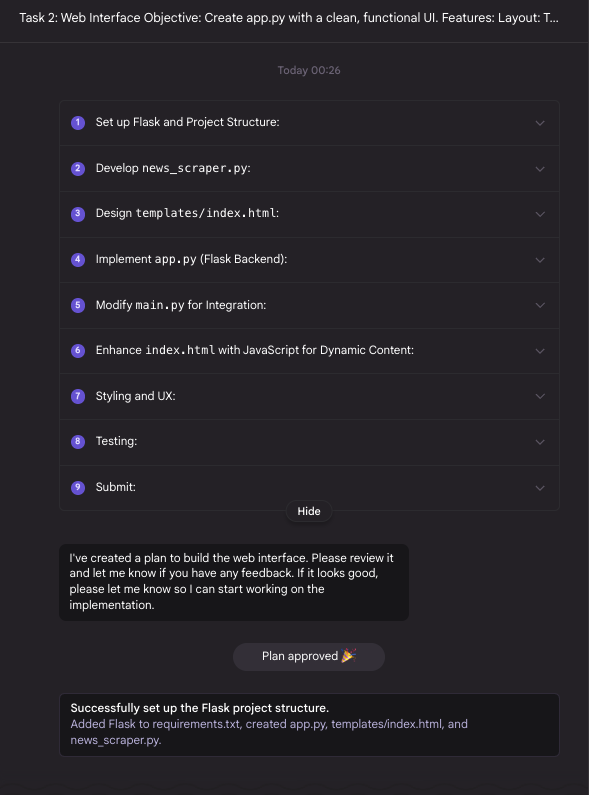

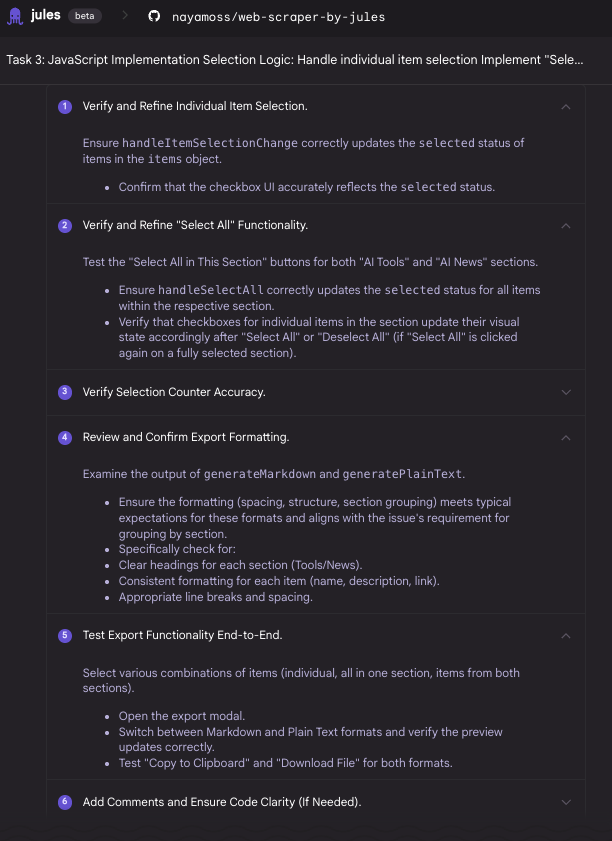

Consider adding a "Copy to Clipboard" button for easy pastingJules demonstrated sophisticated task decomposition, breaking this into logical components: data extraction layer, processing pipeline, web interface, and export functionality. The planning phase revealed strong architectural thinking, it proposed separate modules for scraping, data validation, and user interaction rather than monolithic code.
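To make the table specification in the prompt concrete, here is a minimal sketch of how the two scrapers’ results could be merged into one row type and exported as Markdown for pasting into a newsletter. This is not the code Jules produced: the input dictionary keys (`name`, `title`, `category`, `description`, `source`, `url`), the `Row` type, and the function names are hypothetical, and defaulting tool sources to "FutureTools.io" is an assumption taken from the prompt’s example.

```python
from dataclasses import dataclass


@dataclass
class Row:
    """One line of the combined results table."""
    item_type: str    # "Tool" or "News"
    title: str
    category: str
    description: str
    source: str
    link: str


def combine(tools: list[dict], news: list[dict]) -> list[Row]:
    """Merge both scrapers' output into a single list of table rows."""
    rows = [
        Row("Tool", t["name"], t.get("category") or "Uncategorized",
            t.get("description", ""), t.get("source", "FutureTools.io"), t["url"])
        for t in tools
    ]
    rows += [
        Row("News", n["title"], n.get("category") or "Uncategorized",
            n.get("description", ""), n.get("source", ""), n["url"])
        for n in news
    ]
    return rows


def export_markdown(rows: list[Row], selected: set[int]) -> str:
    """Render the selected rows (by index) as a Markdown list with links intact."""
    if not selected:
        # Mirrors the prompt's rule that export is disabled when nothing is selected.
        raise ValueError("no items selected")
    lines = []
    for i in sorted(selected):
        r = rows[i]
        lines.append(f"- **{r.item_type}** [{r.title}]({r.link}) "
                     f"({r.category}, {r.source}): {r.description}")
    return "\n".join(lines)
```

Under this sketch, the selected-items counter is simply `len(selected)`, and a "Copy to Clipboard" button would copy the string that `export_markdown` returns.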

What impressed me most was its ability to anticipate edge cases I hadn’t explicitly mentioned. For instance, it automatically built in retry logic for failed HTTP requests and data validation for malformed JSON responses, details that often get overlooked in first implementations.
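Retry-with-backoff and tolerant JSON parsing are standard patterns, and a minimal standard-library sketch of both follows. It is an illustration, not the code Jules generated: the `fetch` callable is injected so the logic can run without a network, and the attempt count, delays, and required keys are assumptions.

```python
import json
import time
from typing import Callable


def fetch_with_retry(fetch: Callable[[], str], attempts: int = 3,
                     base_delay: float = 0.5) -> str:
    """Call fetch(), retrying on OSError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fetch()
        except OSError:
            # urllib.error.URLError and socket timeouts are OSError subclasses.
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be >= 1")


def parse_items(body: str, required: tuple[str, ...] = ("title", "url")) -> list[dict]:
    """Parse a JSON array, skipping entries that are malformed or missing keys."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    return [d for d in data if isinstance(d, dict) and all(k in d for k in required)]
```

In a real scraper, `fetch` might wrap something like `urllib.request.urlopen(url, timeout=10).read().decode()`; because `urllib.error.URLError` subclasses `OSError`, network failures of that kind would be retried.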

⚙️ Agentic Workflow Optimization

Through iterative testing, I identified interaction patterns that work well for agentic development and differ significantly from traditional AI coding workflows:

- Feature-Centric Task Design: Instead of “create database schema, add API endpoints, build UI,” use “implement complete user authentication system with OAuth2 integration”

- Sequential Dependency Management: When Task 5 relies on Task 2, explicit sequencing becomes critical because the agent won’t intuitively infer dependencies across separately submitted tasks as a human developer might

- Context Preservation: Maintain conversation continuity for complex features, as Jules can lose context if a single large feature is broken into too many separately initiated task threads
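One way to make sequencing explicit before submitting tasks one at a time is to write the dependencies down and let a topological sort produce a safe order. This sketch uses Python’s standard `graphlib` module (Python 3.9+); the task names and dependency map are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def submission_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Return tasks in an order where every task follows its prerequisites."""
    try:
        return list(TopologicalSorter(depends_on).static_order())
    except CycleError as err:
        # A cycle means the task breakdown itself needs rethinking.
        raise ValueError(f"circular task dependencies: {err.args[1]}") from err


# Hypothetical breakdown: each task maps to the tasks it depends on.
tasks = {
    "Task 2: data model": set(),
    "Task 3: scrapers": {"Task 2: data model"},
    "Task 5: export": {"Task 2: data model", "Task 3: scrapers"},
}

if __name__ == "__main__":
    for name in submission_order(tasks):
        print(name)
```

Writing the map out also surfaces the case where a later task quietly depends on an earlier one, which is exactly the kind of dependency the agent won’t infer across separate submissions.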

🔄 The Multi-Agent Experiment

I tested a fascinating hybrid approach using ChatGPT as the task planner and Jules as the executor. ChatGPT broke projects into numbered, feature-based tasks, and Jules then executed each one individually, in sequence.

This is where things got really interesting. I started the experiment at 4 AM by giving Jules the first task, then set out to see whether it could handle multiple tasks simultaneously. What I discovered was that optimizing the workflow structure unlocked significantly better results.

When given complete autonomy, Jules made some interesting architectural choices that differed from my typical approach. For instance, it opted for a more modular separation of the data processing features; while this increased initial complexity, it produced better maintainability and a clearer separation of concerns. The UI for data review was functional and well-structured, though it took a different approach to user flow than I had originally envisioned.

For feature-isolated tasks like web scraping or Markdown export, the code quality was genuinely impressive: clean, well-commented, and better at handling edge cases than much of the code I see from experienced developers. Jules consistently anticipated potential issues and built in proper error handling without being explicitly prompted.

🆚 Jules in Comparison with Traditional AI Tools

Jules’s asynchronous model offers clear advantages over real-time tools like Cursor: isolated cloud execution, automatic branch management, and clean pull requests with summaries and file-level diffs. While I normally avoid juggling branches, having Jules commit its work to separate branches made version control feel seamless and surprisingly helpful. The limitations become apparent during iterative debugging. If you’re used to the rapid feedback loops of real-time AI assistants, Jules feels more methodical and requires more patience for iteration. It’s also less effective for exploratory coding sessions where you’re not sure exactly what you want to build.

🚀 Production Readiness Assessment

After deploying Jules-generated code to staging, I was impressed by the quality: solid error handling, modular structure, and good documentation. Security was thoughtfully addressed with proper input validation. That said, Jules sometimes over-engineers; it used an observer pattern for a simple task. It also got stuck in a deployment loop due to an unresolved dependency conflict. I later realized I should’ve explored the overview config and repo commands features, which might’ve helped me avoid some of the architectural mismatches I ran into.

🔮 Future Implications of Jules Agents

Jules represents early-stage autonomous development capabilities that will likely reshape how we think about development workflows. Integration with project management tools such as Linear could enable workflows where assigning a task directly triggers an autonomous development cycle.

What would be incredible is a direct Linear integration. Imagine pressing a button on a task to send it to Jules, which would then get started while sending you alerts in AI-powered editors like Cursor or Windsurf. That workflow would be transformative.

For teams considering adopting Jules, I’d recommend designing development processes around feature-complete task assignment rather than granular instruction sets. Think in features, not task lists: if you have one feature with three tasks and three subtasks, give it all to Jules in one go and let it handle the breakdown.
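Here is a small sketch of what “give it all to Jules in one go” might look like in practice: one feature, with its tasks and subtasks, rendered into a single prompt instead of several separate submissions. The `Feature` and `Task` structures and the output layout are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    title: str
    subtasks: list[str] = field(default_factory=list)


@dataclass
class Feature:
    name: str
    goal: str
    tasks: list[Task] = field(default_factory=list)


def feature_prompt(feature: Feature) -> str:
    """Render one feature, with all of its tasks and subtasks, as a single prompt."""
    lines = [f"Feature: {feature.name}", f"Goal: {feature.goal}", "", "Tasks:"]
    for i, task in enumerate(feature.tasks, start=1):
        lines.append(f"{i}. {task.title}")
        lines.extend(f"   - {sub}" for sub in task.subtasks)
    # Leave the final breakdown to the agent rather than micromanaging the order.
    lines += ["", "Plan the breakdown yourself and implement the feature end to end."]
    return "\n".join(lines)
```

Keeping the whole feature in one prompt is what preserves context; splitting it into separate threads is where the agent tends to lose it.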

Jules succeeds most when treated as an autonomous contractor rather than an interactive assistant: assign complete features, provide clear specifications, and review outputs systematically. For teams ready to adapt their workflows, Jules is more than a preview; it is a functional glimpse of a future where development automation significantly reshapes how software is built.

What are your thoughts on the future of agentic coding? Have you experimented with autonomous development tools, and how do they compare to your traditional workflows?
